Interview Gijs Dubbelman

Gijs Dubbelman is an assistant professor in the department of Electrical Engineering, where he heads the Mobile Perception Systems research cluster. He is also involved in the work of the Eindhoven Artificial Intelligence Systems Institute (EAISI). Artificial Intelligence plays a crucial role in Gijs's research. A short introduction in five questions and answers.

What is your key research question?

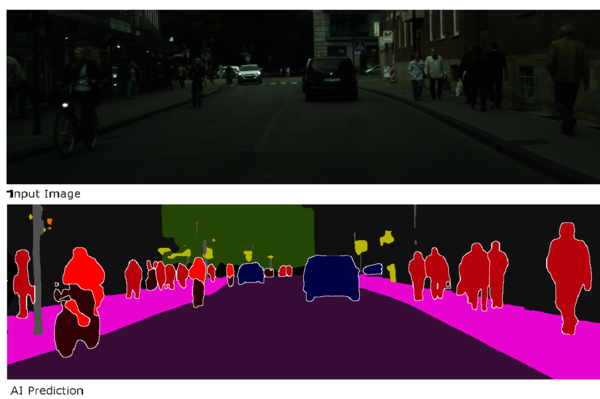

My team at the Mobile Perception Systems lab designs intelligent systems that can move through the world without any human intervention. Think of autonomous robots or self-driving cars. To make this possible, we have to solve two problems. First, we have to teach these systems to perceive and understand the world as it is. Second, based on this digital model, we have to teach them to reason about what will happen in the future. Much of our current work goes into perfecting the perception part. For this we use deep learning algorithms, which are able to reduce images of the multi-faceted world around us to a number of essential categories that the car or robot needs to ‘see’ in order to function. Think of roads, road signs, other traffic, humans, etc. The less data we need to do this, and the more efficient and robust this process is, the sooner the car or robot is able to move around without causing damage or harming anyone.

What is the main challenge in your work?

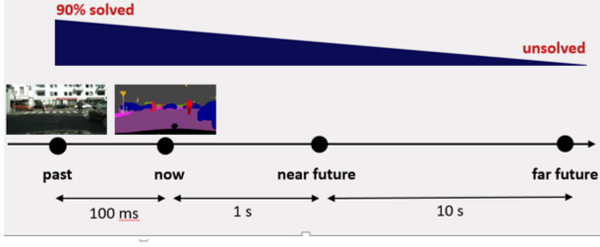

The main challenge lies in taking the next step from perception to reasoning. In other words, how can we teach cars and robots to anticipate future events from past sensory data? Think of a car that ‘understands’ that a pedestrian it ‘sees’ at the side of the road is about to cross to catch a bus stopping on the other side, and responds by braking, preventing a possible accident. At present, we are able to predict roughly up to 1 second into the future, which of course is not enough. In the real world, the time horizon should be several times longer for dynamic and complex environments like European inner cities.

The main bottleneck here is the neural networks we presently have at our disposal, which are still limited to very specialist tasks. This means that they can predict the future reasonably well, but only within certain quite specific constraints. Think of a robot taxi that only runs on certain known routes, where there is less traffic. What we’re trying to achieve is to develop AI algorithms that are truly generalizable.

What are the practical applications of your research? How does it benefit society?

The research we do has applications across a wide range of industries. For instance, we worked together with an industrial bakery that needed smart robots flexible enough to cope with changing configurations of their machines. We also collaborated with NXP on ‘AI-friendly’ processors for the automotive industry, and with TomTom on smart maps and localisation. Our work on perception also finds applications wherever machines need to interact with humans.

How do you see the development of AI in the future?

Based on the tremendous progress in deep learning over the past few years, I expect that by 2030 AI will have become an integral part of our everyday lives. Think of surveillance cameras with facial recognition, and personalized digital devices and home appliances. I also expect big strides on the way from perception to reasoning, once we are able to formulate neural network-friendly models to represent our world, its objects, and the relations between them.

For example, Convolutional Neural Networks are well suited to processing images, but the knowledge needed for reasoning is best represented in graphs. Hence, current research focusses on designing graph structures that are network-friendly and, vice versa, network architectures that are graph-friendly.

As for fully autonomous driving or general AI, I think that’s still some way off. So I don’t see any need for dystopian fearmongering about AI. Of course, there are risks, especially in automatic decision making that directly affects people, for instance in job recruitment or policing. That’s why I think AI should always try to be transparent and explainable, and should involve some degree of human intervention.

Why should any AI researcher want to work at TU Eindhoven?

I think that anyone interested in AI who wants to solve real problems in the real world should feel right at home at TU Eindhoven.

Help us make machines smart

Are you interested in the work of EAISI? Want to join Gijs in his work on making machines understand the world? Either as a student or an academic? Check out what TU Eindhoven has to offer.